Configure NSX-T Baremetal Edge Node redundant MGMT plane

Recently I had to reconfigure NSX-T Baremetal Edge Nodes that were configured with only one NIC for the management plane into a more redundant setup. In this short write-up I will show you the steps I took.

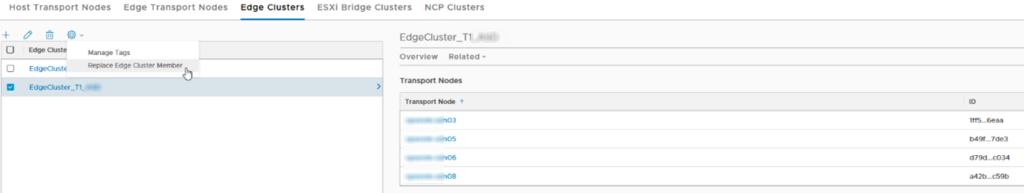

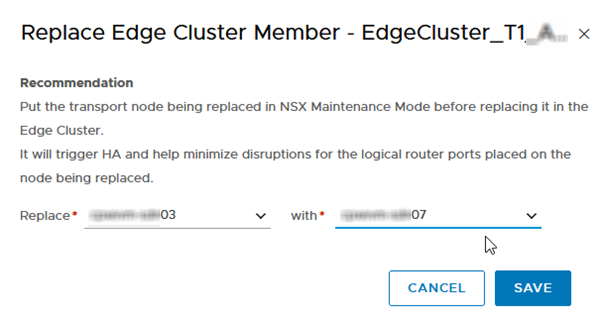

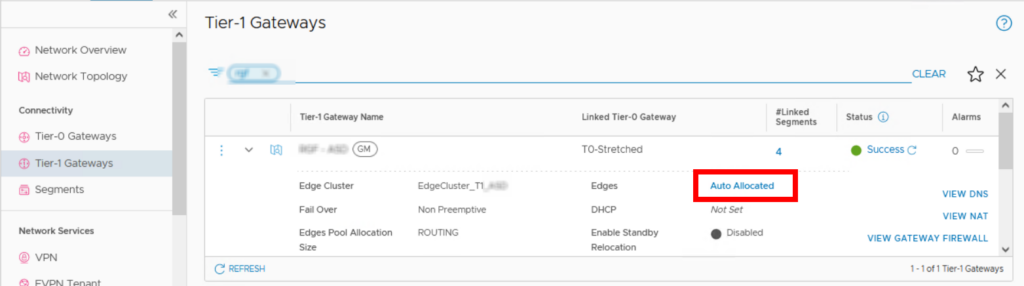

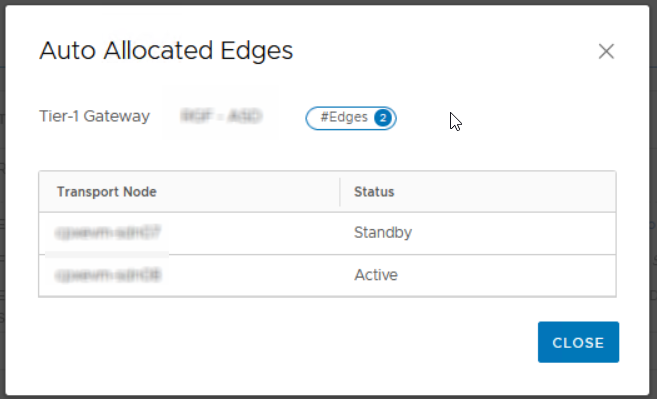

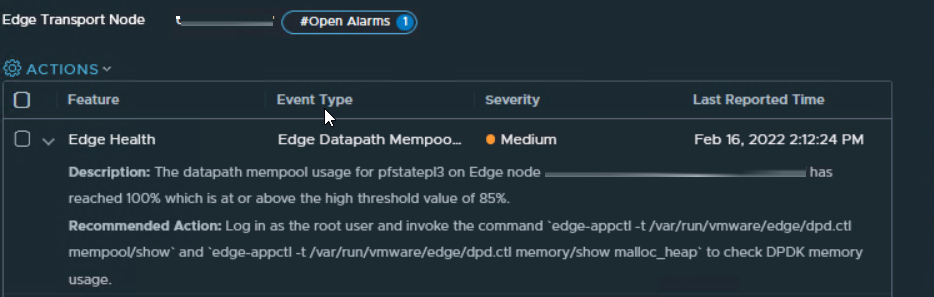

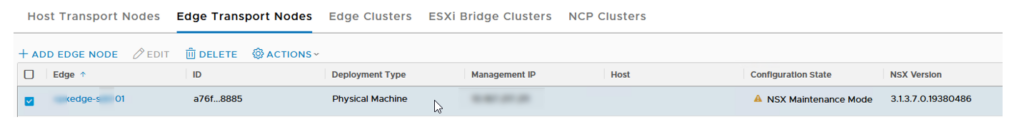

NSX Maintenance Mode

Before proceeding, place the Baremetal Edge Node in Maintenance Mode to fail over the datapath to the other Edge Node in the cluster. We do this just in case we run into an issue during the reconfiguration; the reconfiguration itself should not affect the dataplane.

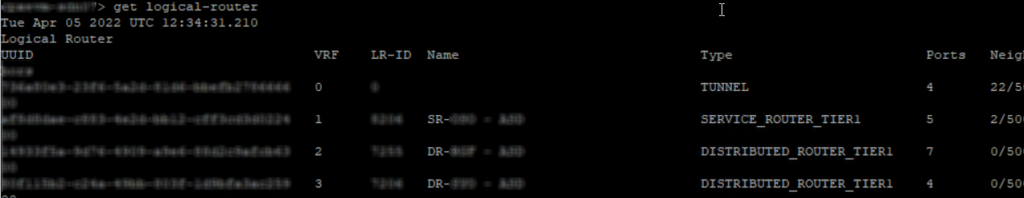

Check Current Config

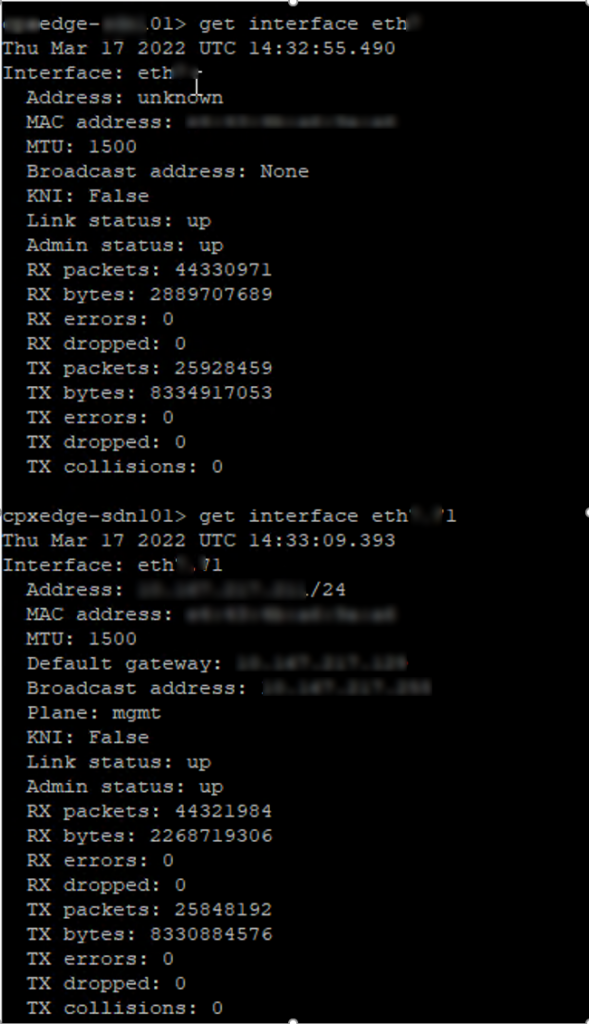

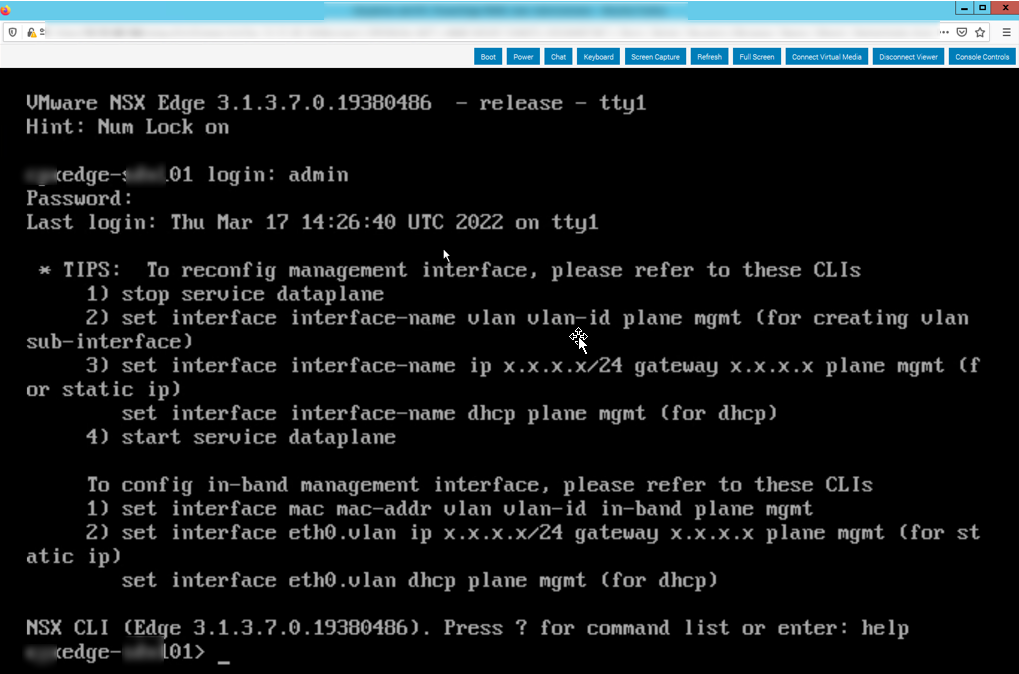

SSH to the Baremetal Edge Node, log in with the admin credentials, and check the current configuration of the management interface.

On this Baremetal Edge Node we use an out-of-band management interface, ethX, connected to management VLAN X. The switch port is configured as a trunk port; the same switch configuration is used when we create the bond later on.

If you use an access port or the native VLAN on the trunk port, the commands are slightly different: the ethX.X interface doesn't have to be created, and the management plane is configured directly on ethX.

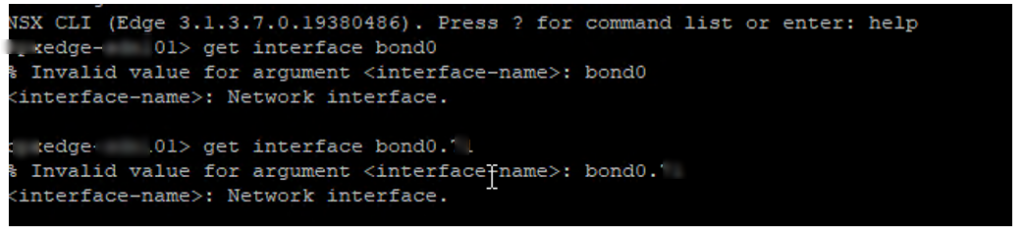

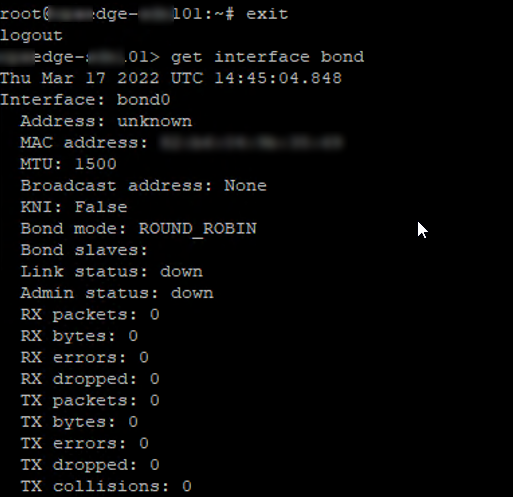

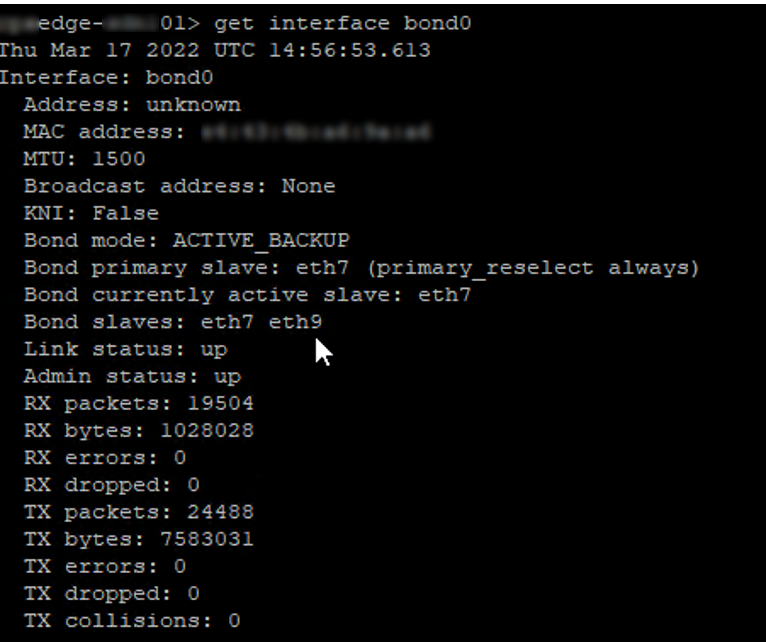

First, check whether the bond0 interface exists:

The bond0 or bond0.X interface (where X is the VLAN ID used) does not exist, so we have to create the bond interface at root level.

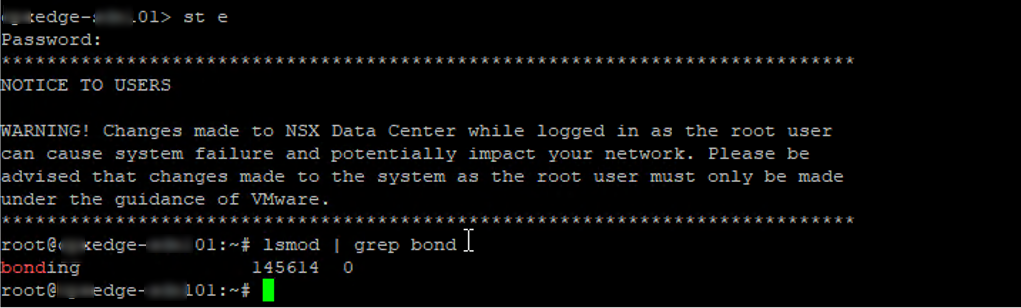

Log in as root with the command st e, then check whether the bond driver is loaded:

lsmod | grep bond

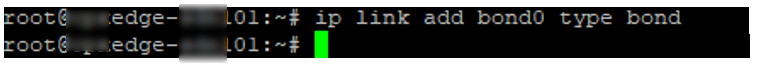

The driver is loaded, so we can create the bond interface with the following command:

ip link add bond0 type bond

Exit the root shell and check whether the bond interface was created:

get interface bond

The bond0 interface is now created and can be used.
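The root-level steps above can be wrapped in a small script so they are safe to repeat. This is only a sketch: the function names bonding_loaded and ensure_bond are made up for illustration, and loading the module with modprobe when it is missing is an assumption that was not needed (or tested) on the node described here.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of NSX-T.

# Succeeds if lsmod-style text on stdin lists the bonding module.
bonding_loaded() {
    awk '$1 == "bonding" { found = 1 } END { exit !found }'
}

# Creates the named bond only if it does not exist yet (needs root).
ensure_bond() {
    name="$1"
    if ip link show "$name" >/dev/null 2>&1; then
        echo "$name already exists"
    else
        ip link add "$name" type bond && echo "$name created"
    fi
}

# Typical use from the root shell (modprobe is an assumption, see above):
#   lsmod | bonding_loaded || modprobe bonding
#   ensure_bond bond0
```

Checking for the interface first means a second run does not fail with a "file exists" error from ip link add.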

Reconfigure the MGMT plane

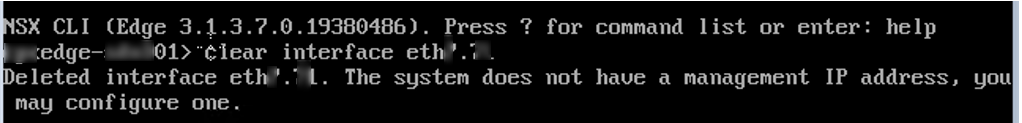

Before we can move the management plane from ethX to the bond0 interface, we have to clear the current configuration. This drops the SSH connection, so connect to the console of the node first:

Remove the configuration from ethX.X:

stop service dataplane

clear interface ethX.X

If you don't use a VLAN, the commands are:

stop service dataplane

clear interface ethX ip

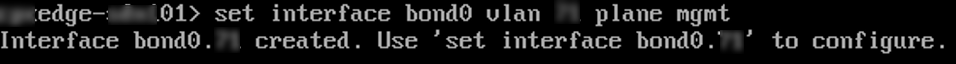

Create a bond0 interface with VLAN X (skip this step when not using a VLAN):

set interface bond0 vlan X plane mgmt

Configure the bond0.X interface:

set interface bond0.X ip x.x.x.x/x gateway x.x.x.x plane mgmt mode active-backup members eth1,eth2 primary eth1

If you don't use a VLAN, the command is:

set interface bond0 ip x.x.x.x/x gateway x.x.x.x plane mgmt mode active-backup members eth1,eth2 primary eth1
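Because these commands are typed on the console while the management connection is down, it can help to prepare the exact sequence beforehand. The sketch below only prints the NSX CLI commands shown in this post for a given setup; it does not run anything. The function name mgmt_bond_commands and its argument order are hypothetical, and the addresses in the example are placeholders from the documentation range.

```shell
#!/bin/sh
# Hypothetical helper: prints the NSX CLI commands from this post for a
# given setup. It does not execute anything on the Edge Node.
# Args: old_nic ip/prefix gateway members primary [vlan]
mgmt_bond_commands() {
    old_nic="$1"; ip_cidr="$2"; gateway="$3"
    members="$4"; primary="$5"; vlan="${6:-}"

    echo "stop service dataplane"
    if [ -n "$vlan" ]; then
        # Tagged setup: clear the VLAN sub-interface, then create bond0.<vlan>
        echo "clear interface ${old_nic}.${vlan}"
        echo "set interface bond0 vlan ${vlan} plane mgmt"
        target="bond0.${vlan}"
    else
        # Access port or native VLAN: the IP sits directly on the NIC
        echo "clear interface ${old_nic} ip"
        target="bond0"
    fi
    echo "set interface ${target} ip ${ip_cidr} gateway ${gateway} plane mgmt mode active-backup members ${members} primary ${primary}"
}

# Example with placeholder values (VLAN 100 on eth0):
#   mgmt_bond_commands eth0 192.0.2.10/24 192.0.2.1 eth1,eth2 eth1 100
```

Leaving out the last argument prints the three-command variant for a setup without a VLAN.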

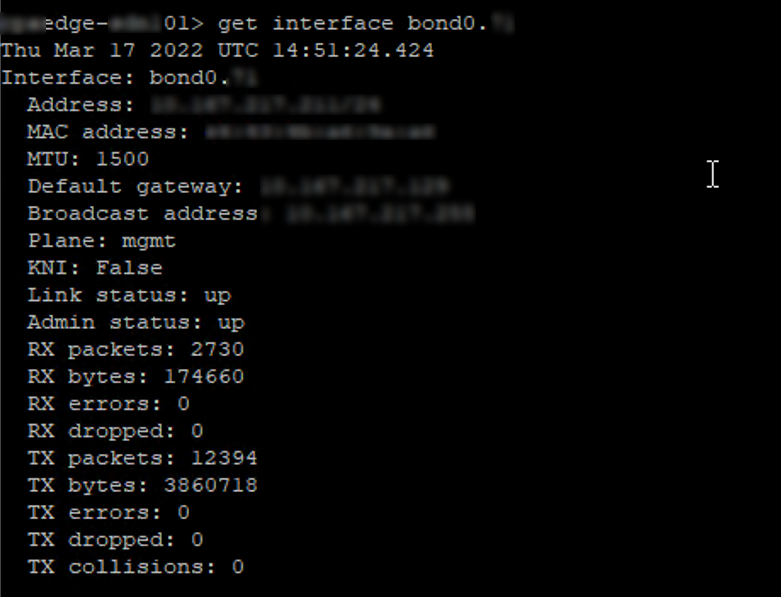

That did the trick: the management interface is now up and configured for high availability.

Check the bond interfaces on the Baremetal Edge Node:
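The bond can also be inspected from the root shell. The sketch below assumes the bond is a regular Linux kernel bond (it was created with ip link add ... type bond), in which case the bonding driver exposes its state in /proc/net/bonding/bond0. The helper name bond_summary is hypothetical, and I have not verified that every NSX-T release exposes this file.

```shell
#!/bin/sh
# Hypothetical helper: summarises a Linux bonding status file.
# Arg: path to the status file (defaults to /proc/net/bonding/bond0).
bond_summary() {
    file="${1:-/proc/net/bonding/bond0}"
    awk -F': ' '
        /^Bonding Mode:/               { print "mode=" $2 }
        /^Currently Active Slave:/     { print "active=" $2 }
        /^Slave Interface:/            { slave = $2 }
        # The first MII Status line belongs to the bond itself; only
        # report the ones that follow a Slave Interface line.
        /^MII Status:/ && slave != ""  { print slave "=" $2; slave = "" }
    ' "$file"
}

# Typical use from the root shell:
#   bond_summary
```

For a healthy active-backup bond, both member NICs should report up and the active member should be the configured primary.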

You can now start the dataplane again (start service dataplane) and take the Bare Metal Edge Node out of Maintenance Mode. Wait until all status indicators turn green!