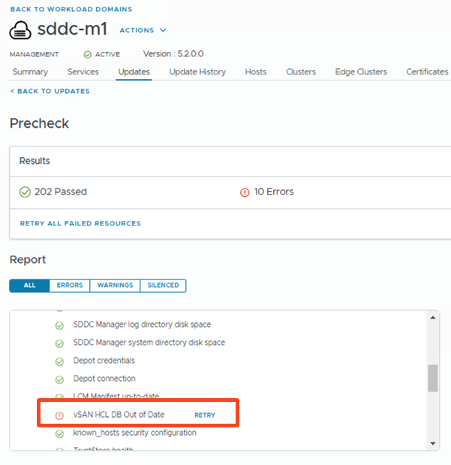

vSAN HCL DB Out of Date – Offline Update

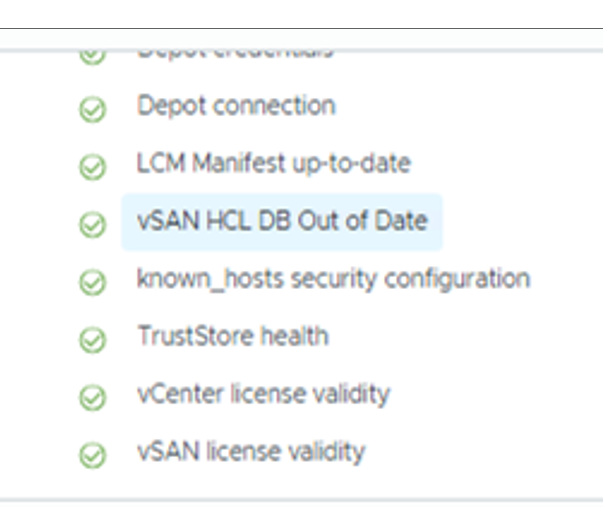

During the upgrade pre-check of the workload domain, I got the following error: vSAN HCL DB Out of Date.

Because my SDDC Manager has no direct internet connection, I first needed to get the file onto my jump host. You can do this by browsing to https://partnerweb.vmware.com/service/vsan/all.json, copying the entire content, and saving it as a new file with the extension ".json".
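If the jump host can run Python, the copy-and-paste step can also be scripted. The sketch below is my own assumption, not part of the procedure in this post: it downloads the file from the URL above with the standard library, checks that the content parses as JSON (assuming all.json is a single JSON object at the top level), and only then writes it to disk, so a truncated copy is caught before the upload. The function names are hypothetical.

```python
import json
import urllib.request
from pathlib import Path

# Download URL from this post; the jump host must be able to reach it.
HCL_URL = "https://partnerweb.vmware.com/service/vsan/all.json"


def fetch_hcl(url: str = HCL_URL, timeout: float = 60.0) -> str:
    """Download the vSAN HCL database and return it as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def validate_hcl(raw: str) -> dict:
    """Parse the content and make sure it is a JSON object.

    Assumption: all.json has a JSON object at the top level. A truncated
    copy/paste raises json.JSONDecodeError, which is a subclass of ValueError.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data


def save_hcl(raw: str, path: Path) -> Path:
    """Validate the content first, then write it to disk unchanged."""
    validate_hcl(raw)
    path.write_text(raw, encoding="utf-8")
    return path
```

For example, `save_hcl(fetch_hcl(), Path("all.json"))` would download the file and store it in the current directory of the jump host.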

This can also be done with the bundle transfer utility, which should also be able to transfer the file to SDDC Manager. Instructions for getting and installing the tool can be found at https://www.aaronrombaut.com/real-world-use-of-vmware-bundle-transfer-utility/

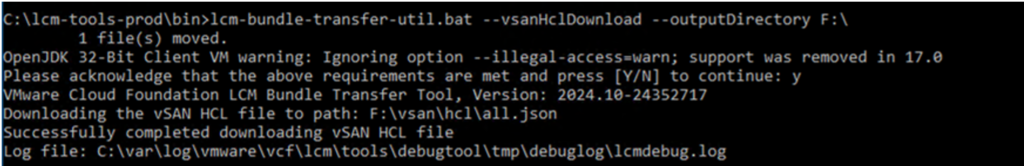

If you have the bundle transfer utility installed, you can get the vSAN HCL file with the following command:

lcm-bundle-transfer-util.bat --vsanHclDownload --outputDirectory F:\

After I got the all.json file on my jump host, I needed to upload it to SDDC Manager:

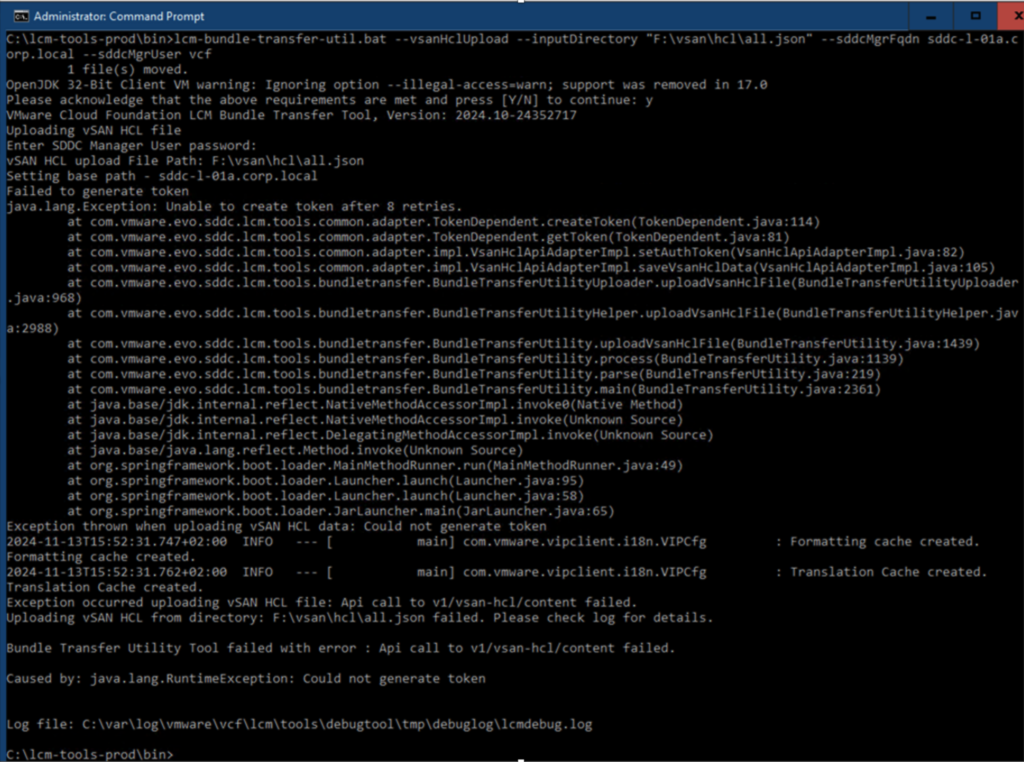

lcm-bundle-transfer-util.bat --vsanHclUpload --inputDirectory "F:\vsan\hcl\all.json" --sddcMgrFqdn sddc-l-01a.corp.local --sddcMgrUser vcf

But as you can see below, this command gave me an error:

It fails because it cannot generate a token. Let's see if we can upload the file through the API instead, using Postman.

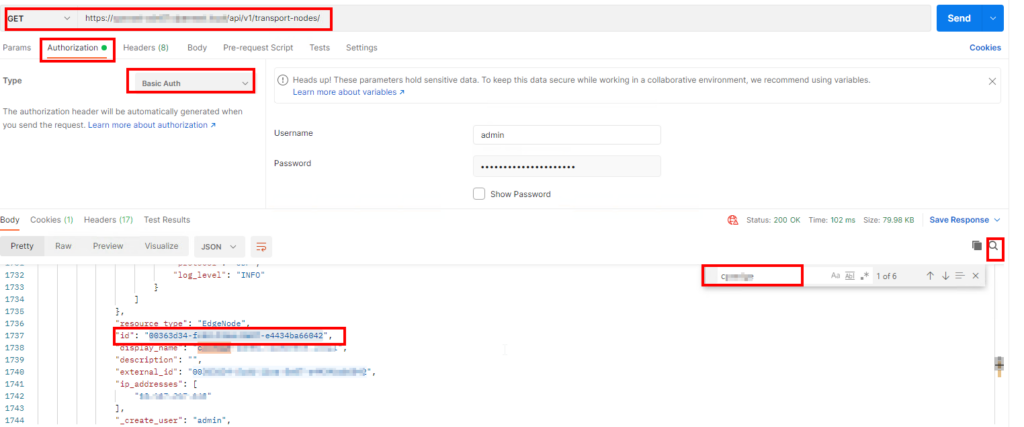

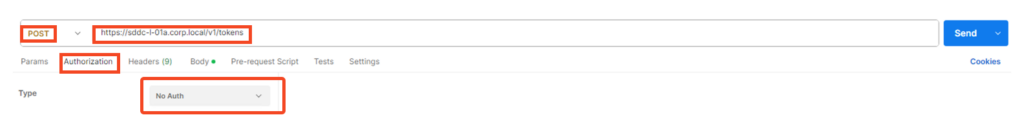

Get a bearer token to authenticate with SDDC Manager:

- Select POST as action

- Fill in the API URL: https://sddc-l-01a.corp.local/v1/tokens

- Under the Authorization tab, select No Auth

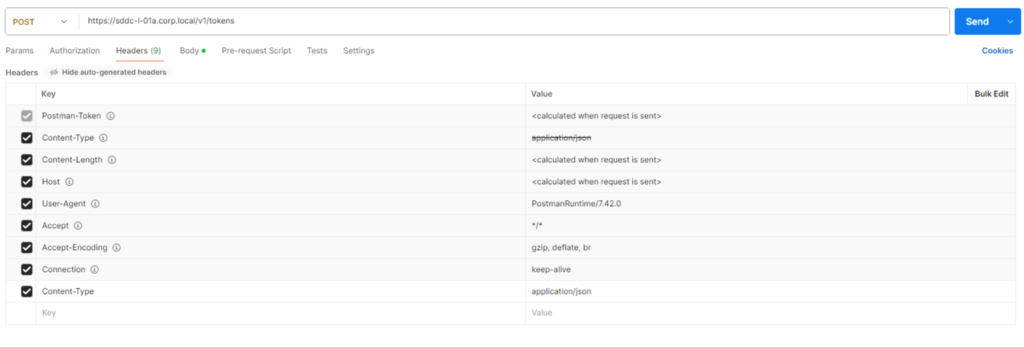

- Under Headers, create a new key Content-Type with value application/json

- Under Body, select raw and JSON, and enter the SSO administrator username and password for SDDC Manager in the field:

{
  "username" : "administrator@vsphere.local",
  "password" : "XXXXXXX"
}

- Now hit the

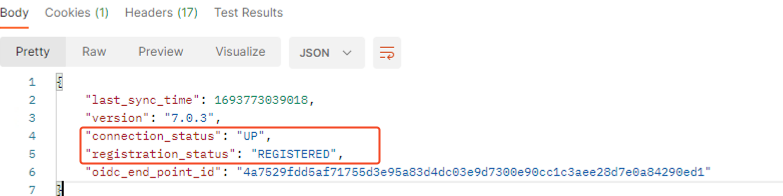

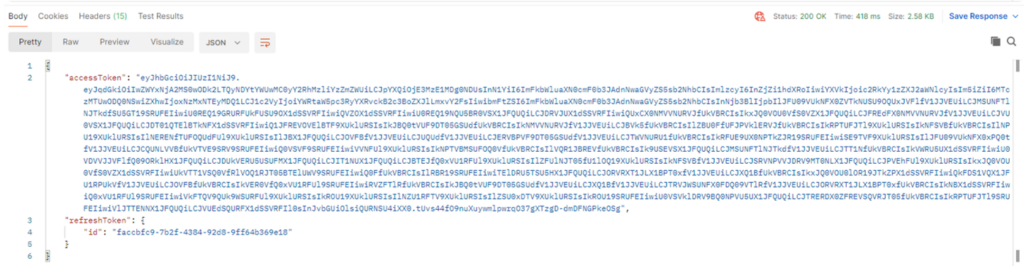

sendbutton and wait for the output:

The bearer token from this output is needed in the request that updates the HCL DB on SDDC Manager:

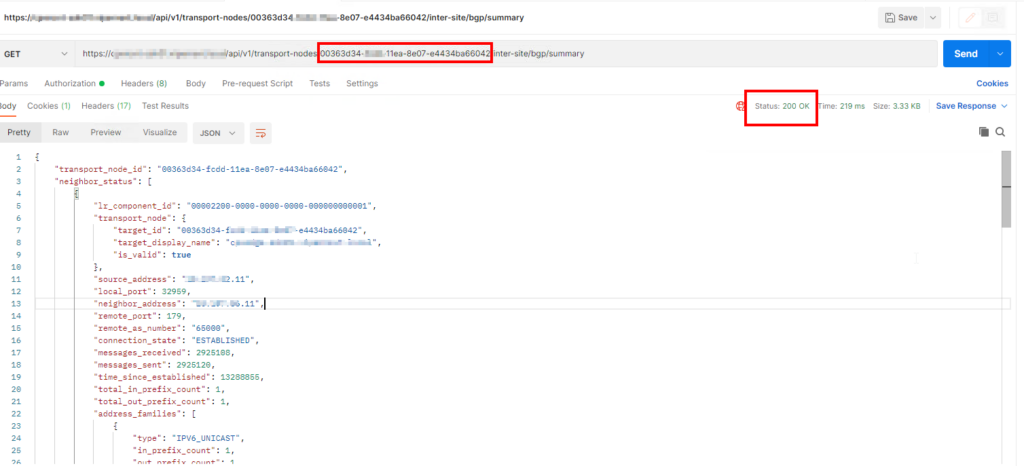
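For repeated runs, the same token request can be made outside Postman. This is a minimal Python sketch using only the standard library; it assumes the token is returned in an `accessToken` field of the JSON response (check this against the output of your own request), and the helper names are hypothetical. Certificate verification can be switched off for a lab SDDC Manager with a self-signed certificate, which you should not do in production.

```python
import json
import ssl
import urllib.request


def build_token_request(fqdn: str, username: str, password: str) -> urllib.request.Request:
    """Build the POST /v1/tokens request from the Postman steps (no auth header)."""
    body = json.dumps({"username": username, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"https://{fqdn}/v1/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_access_token(response_body: str) -> str:
    """Return the bearer token, assuming it is in an "accessToken" field."""
    return json.loads(response_body)["accessToken"]


def make_ssl_context(verify_tls: bool) -> ssl.SSLContext:
    """Create a TLS context, optionally without certificate verification."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Lab use only: accept a self-signed SDDC Manager certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


def get_token(fqdn: str, username: str, password: str,
              verify_tls: bool = True, timeout: float = 30.0) -> str:
    """Send the token request and return the access token."""
    req = build_token_request(fqdn, username, password)
    with urllib.request.urlopen(req, context=make_ssl_context(verify_tls),
                                timeout=timeout) as resp:
        return parse_access_token(resp.read().decode("utf-8"))
```

For example, `get_token("sddc-l-01a.corp.local", "administrator@vsphere.local", "XXXXXXX", verify_tls=False)` mirrors the Postman request above.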

Upload all.json to SDDC Manager via Postman:

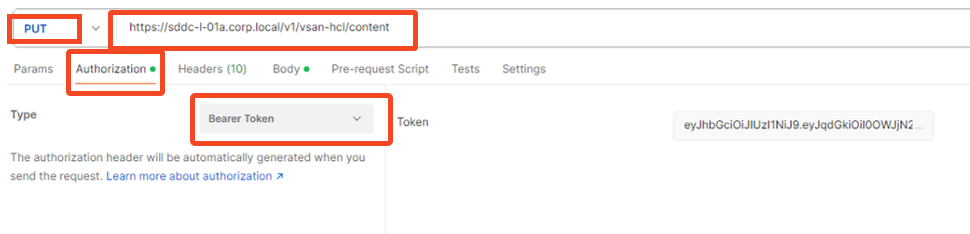

- Select PUT as Action

- Use the following API URL: https://<sddc-fqdn>/v1/vsan-hcl/content

- Under Authorization, select Bearer Token and paste the token from the previous step into the field.

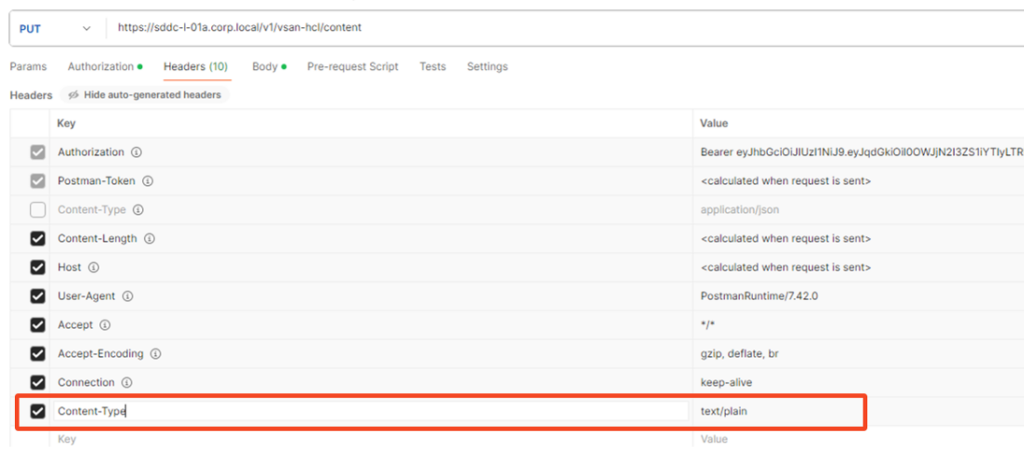

- Under Headers, add a new key Content-Type with value text/plain

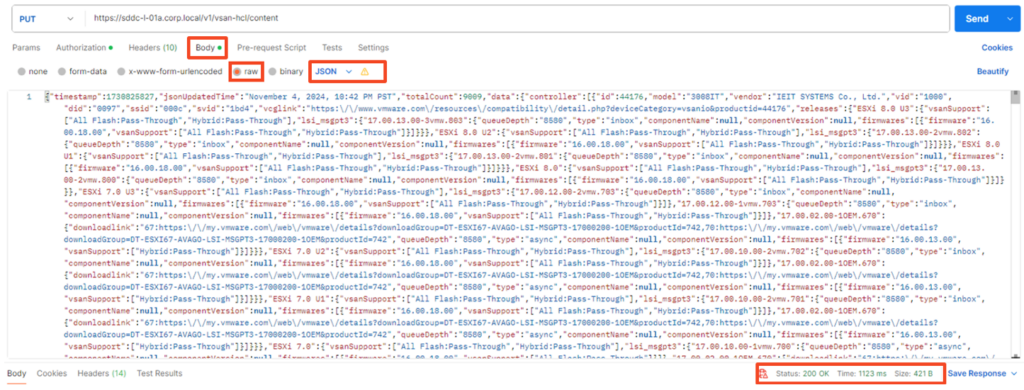

- Under Body, choose raw and JSON

- In the field, paste the content of the all.json file

- Hit Send.

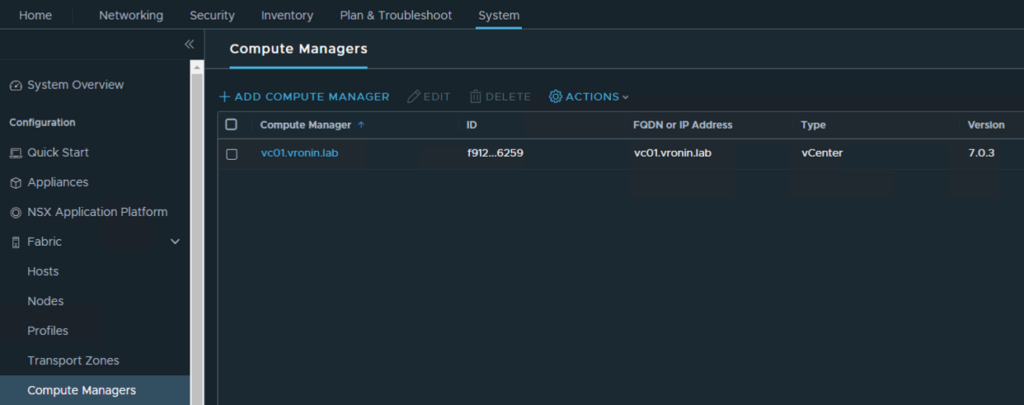

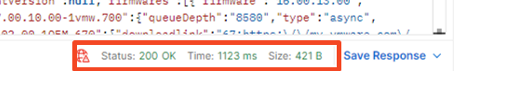

There is no output, only a Status 200 OK.
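The upload request can be sketched the same way. This minimal Python sketch assumes you already have a bearer token from the token request described earlier, and it sends the file with the same method, URL, and headers as the Postman steps (PUT, `/v1/vsan-hcl/content`, `Authorization: Bearer <token>`, `Content-Type: text/plain`). The function names are hypothetical, and the JSON check before sending is an extra safety step, not part of the procedure above.

```python
import json
import ssl
import urllib.request
from pathlib import Path


def build_hcl_upload_request(fqdn: str, token: str, hcl_text: str) -> urllib.request.Request:
    """Build PUT /v1/vsan-hcl/content with the headers used in the Postman steps."""
    return urllib.request.Request(
        f"https://{fqdn}/v1/vsan-hcl/content",
        data=hcl_text.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "text/plain",
        },
        method="PUT",
    )


def upload_hcl(fqdn: str, token: str, hcl_path: Path,
               verify_tls: bool = True, timeout: float = 120.0) -> int:
    """Upload the file and return the HTTP status code."""
    hcl_text = hcl_path.read_text(encoding="utf-8")
    json.loads(hcl_text)  # extra safety check: stop before sending a broken file
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Lab use only: accept a self-signed SDDC Manager certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    req = build_hcl_upload_request(fqdn, token, hcl_text)
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses, so a
    # returned value is a 2xx success code.
    with urllib.request.urlopen(req, context=ctx, timeout=timeout) as resp:
        return resp.status
```

For example, `upload_hcl("sddc-l-01a.corp.local", token, Path(r"F:\vsan\hcl\all.json"), verify_tls=False)` should return 200 if SDDC Manager accepts the file, matching the empty 200 OK response seen in Postman.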

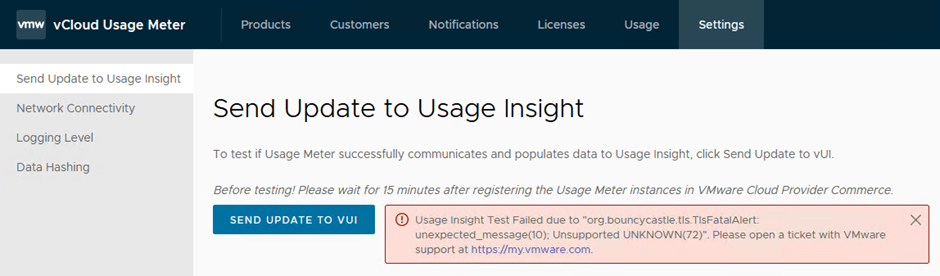

Let’s check whether this particular pre-check error is now solved.

VICTORY!!